How to Reduce the Risk of Getting Your Proxies Blocked

If you do web scraping for a living, you probably know that you face two issues: legal considerations and blocks. The two are closely related, and the debate about the legality and ethics of scraping shows no sign of subsiding.

In this article, we will show you how to use proxies without getting blocked by websites. Let's dive in.

Methods that will help you keep proxies from being blocked

Here are some ways to reduce the chance of being blocked so that you can get on with your work.

Respect the website

Behave well and follow the website's crawl guidelines: this is the main way to keep your proxies from being blocked, and it will give you the best results. Most sites have a robots.txt file that spells out what crawlers may and may not do on the site.
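As a minimal sketch of checking robots.txt before crawling, the example below uses Python's standard `urllib.robotparser`. The robots.txt content, the `my-scraper` agent name, and the example.com URLs are made up for illustration, and the rules are parsed from a string so the example runs offline.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example needs no network.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# "my-scraper" is an assumed name for our crawler.
allowed = parser.can_fetch("my-scraper", "https://example.com/products/1")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/data")
delay = parser.crawl_delay("my-scraper")  # seconds requested by the site, or None
```

For a live site you would typically call `set_url()` with the site's robots.txt address and then `read()` instead of parsing an inline string.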

Another document to look at is the site's Terms of Service (ToS). There you will likely find whether the data on the site is considered public or copyrighted, and how you may access the server and the data on it.

Many large sites do allow data collection. They simply set conditions under which web scraping should take place. If you stay within the permitted limits, your actions are far less likely to lead to an IP block. Following a site's rules also contributes to a healthier culture on the Internet.

Multiple real user agents

The User-Agent header of an HTTP request carries important information. It can include the type of application, operating system, software, and version.

Common user agents follow a small number of popular configurations. A blank or unusual user agent is a red flag for the target site. Sending a large number of requests with the same user agent is also a bad idea and can lead to blocking.

Use real user agents to avoid being blocked, and be sure to rotate them. If the site finds that a significant proportion of its incoming traffic looks alike, it can block the user agents involved. Remember to check the technical specifications of your proxy servers to make sure they meet your requirements for avoiding blocks.
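A simple way to rotate user agents is to pick one at random for every request. The sketch below assumes a small hand-maintained pool. The strings follow the format real browsers use, but they are only illustrative and go stale as browsers update, and the function name is hypothetical.

```python
import random

# Illustrative user-agent strings; refresh these periodically from real browsers.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def headers_with_random_user_agent() -> dict:
    """Return request headers with a User-Agent chosen at random from the pool."""
    return {"User-Agent": random.choice(USER_AGENTS)}
```

The returned dictionary can be passed as the headers of whichever HTTP client you use.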

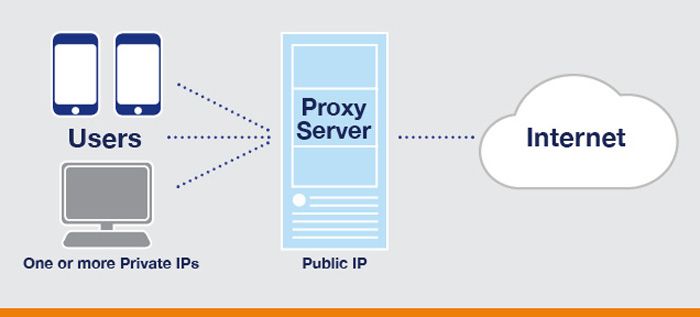

Rotating IPs

Making a large number of requests from the same IP address is a reliable way to get it blocked. For web scraping, you need to use several proxies and rotate between them, which greatly lowers the chance of being blocked.

This will allow you to perform various data mining tasks. It becomes much harder for a site to block you completely: even if it blocks one IP address, you still have a whole pool of IP addresses that you can use to get the data you need.
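The idea of a rotating pool can be sketched as a small round-robin structure that also drops addresses once they are blocked. The `ProxyPool` class and the proxy addresses below are hypothetical (the IPs come from a range reserved for documentation), and this is only one possible design.

```python
from collections import deque
from typing import Iterable


class ProxyPool:
    """Round-robin pool of proxy URLs that can drop blocked entries."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._proxies = deque(proxies)

    def next_proxy(self) -> str:
        """Return the proxy at the front and move it to the back of the queue."""
        if not self._proxies:
            raise RuntimeError("no proxies left in the pool")
        proxy = self._proxies[0]
        self._proxies.rotate(-1)
        return proxy

    def mark_blocked(self, proxy: str) -> None:
        """Remove a proxy that the target site has blocked, if still present."""
        try:
            self._proxies.remove(proxy)
        except ValueError:
            pass

    def __len__(self) -> int:
        return len(self._proxies)


# Placeholder addresses for illustration only.
pool = ProxyPool([
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
])
```

Each request then asks the pool for `next_proxy()`, and a response that signals a block (for example HTTP 403 or 429) is a reasonable trigger for `mark_blocked()`.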

Scrape At a Slower Speed and Rate

The bots used for scraping retrieve data from a website very quickly, many times faster than a human could read it. This immediately arouses suspicion, like any other behavior that differs from a person's. A large number of simultaneous requests can also increase the load on the server and even cause downtime.

The best solution is to slow the scraper down. Increase the interval between requests. Using fewer concurrent requests is also good practice.

A delay of 3 to 10 seconds between requests is a good starting point. Requests at that frequency are much less likely to look suspicious, which means your IP is less likely to be blocked and you will still be able to complete your tasks.
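A minimal sketch of such throttling is shown below: requests are made one at a time, with a random pause of 3 to 10 seconds between them. The function names are hypothetical, and `fetch` stands for whatever function you use to download a page.

```python
import random
import time
from typing import Callable, Iterable, List


def polite_delay(
    min_seconds: float = 3.0,
    max_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Pause for a random time between min_seconds and max_seconds; return it."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


def crawl_slowly(
    urls: Iterable[str],
    fetch: Callable[[str], object],
    sleep: Callable[[float], None] = time.sleep,
) -> List[object]:
    """Fetch URLs sequentially, pausing before every request except the first."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            polite_delay(sleep=sleep)
        results.append(fetch(url))
    return results
```

The `sleep` parameter exists only so that the pause can be replaced in tests; in normal use the default `time.sleep` applies.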

Randomize Your Crawling Pattern

Regular navigation that always follows a single movement pattern is what gives a bot away. As with the other methods, it is best to configure the bot to move around randomly and perform actions such as scrolling and clicking. The less predictable a bot's behavior is, the more it looks like an ordinary person.
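One way to sketch this is to generate a randomized visit plan: the pages are visited in shuffled order, and each page gets a random sequence of actions. The action names and the `random_visit_plan` function are hypothetical. Actually performing the actions would be the job of a browser automation tool, which is outside this example.

```python
import random
from typing import List, Optional, Sequence, Tuple

# Hypothetical action names that a browser automation layer would interpret.
ACTIONS = ("scroll", "click", "pause")


def random_visit_plan(
    urls: Sequence[str],
    max_actions: int = 4,
    rng: Optional[random.Random] = None,
) -> List[Tuple[str, List[str]]]:
    """Return the URLs in random order, each paired with 1..max_actions actions."""
    rng = rng or random.Random()
    order = rng.sample(list(urls), k=len(urls))  # shuffled copy, input untouched
    plan = []
    for url in order:
        count = rng.randint(1, max_actions)
        actions = [rng.choice(ACTIONS) for _ in range(count)]
        plan.append((url, actions))
    return plan
```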

Referrers

A referrer is another piece of information that you provide to the site you are scraping. The site can use this information to understand what you are doing.

The referrer is the place from which you came to the site. If you open a new tab in your browser, type www.google.com and hit Enter, the visit shows up as direct traffic without a referrer.

It is best to visit the homepage of the site first. Jumping straight to a deep URL, such as a full search string, is less believable and acts as a warning sign.
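The homepage-first approach can be sketched with the standard library as follows. The first request goes to the homepage with no referrer, and the second goes to the target page with the homepage in the `Referer` header (the HTTP header name is spelled with a single "r"). The function names and the example.com URL are assumptions, and the requests are only constructed here, not sent.

```python
from typing import List
from urllib.parse import urlsplit
from urllib.request import Request


def homepage_of(url: str) -> str:
    """Return the scheme and host of a URL as the site's homepage address."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}/"


def plan_requests(target_url: str) -> List[Request]:
    """Build a homepage request first, then the target with a Referer header."""
    home = homepage_of(target_url)
    first = Request(home)  # no Referer: looks like direct traffic
    second = Request(target_url, headers={"Referer": home})
    return [first, second]


requests_to_send = plan_requests("https://example.com/search?q=laptops")
```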

Request Headers

Genuine web browsers set many different headers, and websites can check any of them to identify and block your scraper. Sending realistic headers is one way to avoid that.

To make your scraper look more realistic, you can copy all the headers from online tools that display them. These are the headers your browser is currently sending.

For example, setting “Upgrade-Insecure-Requests”, “Accept”, “Accept-Encoding” and “Accept-Language” will make it look like your requests are coming from a real web browser.
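As a rough sketch, the four headers named above could be attached to a request like this. The header values are illustrative assumptions modelled on what desktop browsers commonly send, and the function names are hypothetical. Copy the values from your own browser for realistic results.

```python
from urllib.request import Request


def browser_like_headers() -> dict:
    """Return illustrative values for the four headers discussed above."""
    return {
        "Upgrade-Insecure-Requests": "1",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        # Advertising gzip means the body may arrive compressed;
        # urllib does not decompress it automatically.
        "Accept-Encoding": "gzip, deflate",
        "Accept-Language": "en-US,en;q=0.9",
    }


def build_request(url: str) -> Request:
    """Create a request object (not sent here) carrying browser-like headers."""
    return Request(url, headers=browser_like_headers())
```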

Conclusion

The methods listed above will help you avoid proxy blocks. Contact Litport and try our solutions. We use our own equipment, so you get only high-quality proxy servers around the world. Our solutions are completely legal and confidential.