Why Scrape Gstatic.com and What Is Its Purpose? An In-Depth Look

TLDR: key takeaways

- Gstatic.com is Google's content delivery network that stores static content like images, JavaScript, and CSS to speed up the loading of Google services.

- Web scraping Gstatic.com can provide insights for web development, research, business intelligence, and content creation, but must be done ethically and legally.

- Effective techniques for scraping Gstatic.com include using the right tools, handling dynamic content, and managing rate limits while following best practices like using proxies and caching data.

Ever wondered about the magic behind the lightning-fast loading of Google services? The answer lies in Gstatic.com, Google's pivotal domain for content delivery. This often unnoticed powerhouse ensures your seamless experiences on platforms like Gmail and Google Maps. In this comprehensive guide, we'll take a deep dive into Gstatic.com, exploring its purpose, structure, and the intricacies of web scraping it ethically and efficiently.

Understanding Gstatic.com

Gstatic.com is a domain owned by Google that plays a critical role in the digital ecosystem. It functions as a content delivery network (CDN) designed to aid in the faster loading of Google's content from servers located globally. The primary purpose of Gstatic.com is to store and deliver static content such as:

- Images - Icons, logos, and other visual elements used across Google services

- JavaScript - Scripts that power various functionalities on Google's websites

- CSS - Stylesheets that define the look and feel of Google's web properties

By centralizing this content, Gstatic.com minimizes the volume of data transmitted over the internet, thereby accelerating the loading speed of Google's services. So when you access Gmail or Google Maps, Gstatic.com works behind the scenes to ensure a quicker and more efficient user experience.

Another key aspect of Gstatic.com is its use of subdomains. Rather than being a singular website, it comprises several subdomains, each tailored for a specific purpose. These subdomains work together to enable the seamless operation of Google's vast array of services.

The Structure of Gstatic.com

If you try to directly access the root domain of Gstatic.com, you'll encounter a "404 Not Found" error. This is expected behavior, as Gstatic.com is primarily a CDN and not designed for direct browsing. However, understanding its structure is crucial for effective web scraping.

Here are some key characteristics of Gstatic.com's structure:

| Content Type | Primarily hosts static content like images, JavaScript, and CSS |

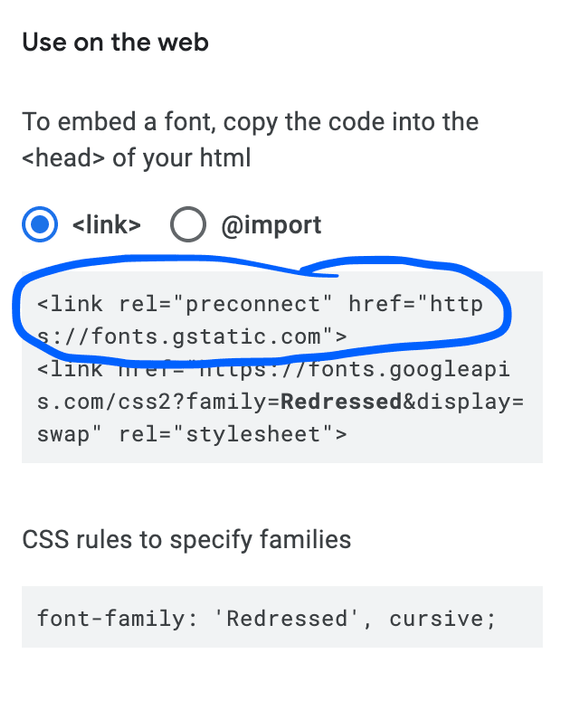

| Subdomains | Uses various subdomains to organize and serve specific types of content, e.g., fonts.gstatic.com for Google Fonts |

| Optimization | Resources are optimized for fast delivery through techniques like minification and compression |

| Caching | Leverages browser caching by setting long cache lifetimes for resources |

| Global Distribution | Utilizes a CDN to distribute content across multiple servers worldwide, reducing latency |

Gstatic.com's Purpose and Functionality

Now that we've grasped the structure of Gstatic.com, let's explore its core functionalities and the role it plays in optimizing the performance and reliability of Google services:

- Speed Enhancement: By storing static content, Gstatic.com significantly reduces the time taken to load Google services like Gmail and Maps.

- Bandwidth Optimization: Hosting static resources on Gstatic.com decreases bandwidth usage by reducing the amount of data sent over the internet.

- Network Performance: Gstatic.com ensures efficient delivery of Google services without unnecessary delays, enhancing overall network performance.

- Static Data Storage: It serves as a repository for essential static components like JavaScript libraries and stylesheets required for websites to function correctly.

- Internet Connectivity Verification: Gstatic.com assists in verifying internet connections, particularly when using Chrome or an Android device.

Additionally, Gstatic.com encompasses several subdomains, each serving a specific purpose. For instance, fonts.gstatic.com handles requests to the Google Fonts API, while maps.gstatic.com enables embedding Google Maps images without the need for JavaScript or dynamic page loading.

It's worth noting that while Gstatic.com is a legitimate Google service, there have been instances of cybercriminals creating counterfeit versions of the domain to install unwanted applications and adware. Therefore, always ensure the authenticity of the domain and be cautious of suspicious activity.

Why Scrape Gstatic.com?

Given that Gstatic.com primarily delivers content, it consists mostly of paths to various static files. The URL structure typically follows the pattern https://www.gstatic.com/ followed by the file path, which can be several directories deep. While these paths may appear random and unorganized to an outside user, they serve the purpose of efficient content delivery.

Despite the lack of a conventional website structure, scraping Gstatic.com can still provide value in several use cases:

- Web Development and Testing: Developers may scrape Gstatic.com to understand how Google structures its static content or to test the performance of their own applications when fetching external resources.

- Research: Researchers can study web optimization techniques, content delivery networks, or other technical aspects of web delivery by scraping the domain.

- Business Intelligence: Data scraping can provide insights into market trends, customer preferences, and competitive analysis. For example, scraping Google's "COVID-19 Community Mobility Reports" can shed light on mobility patterns across regions and time.

- Content Creation: Scraped data can be used to generate content for websites, blogs, or news articles. Scraping Google Alert emails, for instance, can help create newsletters by summarizing relevant stories.

- Lead Generation: Businesses can scrape public data sources to identify potential customers or sales leads in specific industries or niches.

- Content Retrieval: If a particular piece of content, such as an image or script, is known to be hosted on Gstatic.com, scraping can be used to retrieve it. However, this is less common as most content on the domain is meant to support other Google services rather than stand alone.

It's important to note that like any other website, Gstatic.com could potentially be targeted by malicious actors seeking vulnerabilities or gathering information for nefarious purposes. However, given Google's robust security measures, such attempts are likely to be thwarted.

Legal and Ethical Considerations

When scraping Gstatic.com, it's crucial to respect Google's terms of service (TOS) and ensure compliance with applicable laws and regulations. Violating the TOS can lead to legal consequences and is generally considered unethical.

Here are some key legal and ethical considerations to keep in mind:

- Copyright Concerns: While raw data can be scraped, the presentation of that data on a website may be protected by copyright laws. Ensure that your scraping and use of the data doesn't infringe on any copyrights.

- Data Protection and Privacy: If the scraped data includes personally identifiable information (PII), be aware of data protection regulations like GDPR and CCPA. Non-compliance can result in hefty fines and legal repercussions.

Approaching web scraping with a clear understanding of both legal and ethical considerations is not only the right thing to do but also ensures the longevity and legitimacy of your scraping projects.

Techniques for Scraping Gstatic.com

To effectively scrape Gstatic.com, you need to select the right tools and techniques. Here's a step-by-step guide:

- Choose a Programming Language and Framework: Python is a popular choice for web scraping due to its simplicity and powerful libraries like Beautiful Soup and Scrapy. Beautiful Soup excels at parsing HTML and XML, while Scrapy provides a comprehensive framework for building web scrapers and crawlers.

- Identify the Target Data: Before starting, clearly define the specific data you want to extract from Gstatic.com. Understand the website's structure and the location of the desired data.

- Inspect the Website Structure: Use browser developer tools to view the source code and identify the HTML tags containing the data you want to scrape.

- Select the Scraping Method: If Gstatic.com offers an API with the required data, prefer using it over web scraping, as it's more efficient and less likely to violate terms of service. Resort to web scraping only if an API is unavailable or provides limited access.

- Implement the Scraping Code: Use libraries like Beautiful Soup or Scrapy to define how the site should be scraped, what information to extract, and how to extract it. For example, Scrapy uses spiders to specify the crawling and extraction process.

Before scraping Gstatic.com or any other website, always check the robots.txt file to determine if scraping is allowed. Some websites prohibit scraping to prevent server overload and traffic spikes.

Cleaning and Preprocessing Scraped Data

Raw scraped data often contains irrelevant information, errors, or missing values that need to be addressed before analysis. This may involve removing broken links, correcting errors, or converting the data into a suitable format.

For instance, when scraping images, you might encounter broken links or irrelevant results. Tools like Google Apps Script can help retrieve specific data, such as favicons, and save them directly to platforms like Google Drive.

Preprocessing text data may include tokenization, removing stop words, or stemming to prepare it for analysis.

Extracting Insights from Scraped Data

The techniques used to analyze scraped data depend on the nature of the data and the objective of the analysis. For example:

- Scraped images can be categorized using image recognition algorithms.

- Textual data can be analyzed using natural language processing (NLP) techniques to understand sentiment, extract entities, or identify themes.

Dealing with Anti-Scraping Measures

Websites often employ anti-scraping measures to protect their data, ensure a good user experience, and prevent server overload. Common anti-scraping measures include:

- Bot Access Restrictions: Some sites explicitly forbid automated data collection in their robots.txt file. If Gstatic.com disallows scraping, respect this directive or seek permission from the website owner.

- CAPTCHAs: These tests differentiate between human and automated access. CAPTCHAs can disrupt the scraping process, but CAPTCHA solving services can help overcome this challenge.

- IP Blocking: Websites may block IP addresses that make an unusually high number of requests. Using proxy servers to make requests from different IP addresses can circumvent IP blocking.

Handling Dynamic Content and JavaScript Rendering

Dynamic content refers to web page elements that load or change based on user interactions, server-side logic, or external factors. Traditional scraping methods may not capture this content since it's loaded asynchronously using JavaScript.

To handle dynamic content, use headless browsers that can be automated to navigate websites, interact with dynamic elements, and render JavaScript just like a regular browser. This ensures that all content, including JavaScript-rendered elements, is loaded before scraping.

Managing Rate Limits and IP Blocking

Rate limits restrict the number of requests a user or IP address can make to a website within a specific time frame. Exceeding these limits can lead to temporary or permanent IP bans. To manage rate limits:

- Introduce random intervals between requests to make the scraping activity appear more human-like and less suspicious.

- Avoid scraping during a website's peak hours when there's higher traffic, as it can strain the servers and increase the chances of IP blocking.

Ready to try?

Best Practices for Scraping Gstatic.com

To ensure efficient, ethical, and legal data extraction from Gstatic.com, follow these best practices:

- Use Proxies and Rotate IP Addresses: Rotate IP addresses and use proxy services like residential proxies from Geonode to avoid detection and potential blocking. This masks your identity and minimizes the risk of getting blacklisted.

- Cache and Store Scraped Data Efficiently: Parse extracted data into a structured format like JSON or CSV for easy analysis. Regularly verify parsed data to ensure accuracy. Cache scraped data to reduce the load on the website's servers and enhance scraper performance.

People Also Ask

Is Gstatic.com a virus?No, Gstatic.com is not a virus. It's a legitimate domain owned by Google used to host static content for Google services to reduce server load and increase web performance.

Is Gstatic.com a tracker?Gstatic.com itself isn't a tracker. It serves static content for Google services. However, interactions with the domain might be monitored as part of Google's broader tracking network for improving user experience and targeted advertising.

Why do I have Gstatic.com on my iPhone or Android?If you see Gstatic.com on your device, it's likely because you've visited a Google service or a website that uses Google's infrastructure. The domain helps load content faster by delivering static files, ensuring a smoother online experience.

Conclusion

As websites evolve in complexity, scraping them becomes more challenging. Some sites employ anti-crawler systems to deter scraping bots. However, robust web scraping tools can navigate these challenges and extract data successfully.